PostgreSQL 進階:不只是 SELECT * FROM

上一篇 建立了 PostgreSQL 的單節點部署基線——安裝設定、PgBouncer 連線池、備份還原、基礎調校。那些是「活下去」的技能。這篇要談的是「活得好」的技能:當使用者抱怨搜尋太慢、Dashboard 載入要 5 秒、JSONB 欄位的查詢吃掉所有 CPU——你需要的不是換資料庫,而是把 PostgreSQL 用對。

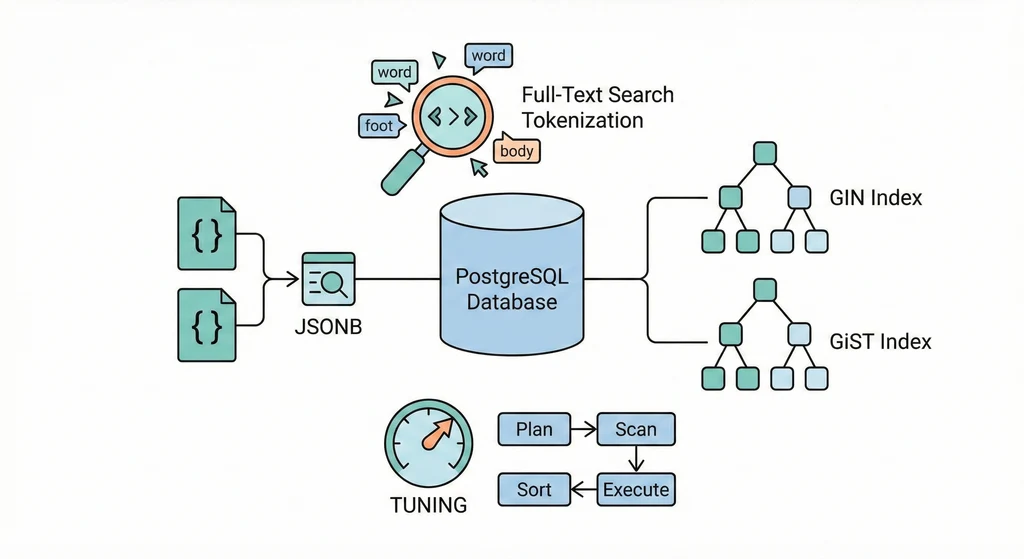

PostgreSQL 內建 Full-Text Search、支援 JSONB 索引、有六種 Index 類型、有完整的 query planner 和執行計畫分析工具。大部分團隊在喊出「我們需要 Elasticsearch」之前,其實連 PostgreSQL 本身的能力都沒用到 30%。這篇的目標:讓你在搬出 Elasticsearch 之前,先把 PostgreSQL 的進階功能壓榨乾淨。

架構概覽

flowchart TD subgraph FTS["Full-Text Search"] Doc["文件內容"] --> TSV["tsvector\n文字向量化"] Query["搜尋詞"] --> TSQ["tsquery\n查詢解析"] TSV --> GIN["GIN Index\n倒排索引"] TSQ --> GIN GIN --> Rank["ts_rank\n相關性排序"] end subgraph Partitioning["Table Partitioning"] BigTable["大型表"] --> P1["Partition 1\n按時間"] BigTable --> P2["Partition 2"] BigTable --> P3["Partition 3"] end subgraph Replication["Replication"] Primary["Primary\n寫入"] --> Replica1["Replica 1\n讀取"] Primary --> Replica2["Replica 2\n讀取"] end subgraph MVCC["MVCC 並行控制"] TX1["Transaction 1"] --> Snapshot["Snapshot\n版本快照"] TX2["Transaction 2"] --> Snapshot end

為什麼 PostgreSQL 能撐全站搜尋

多數開發者一聽到「搜尋功能」就直覺想到 Elasticsearch。先看一組數據:

| 場景 | PostgreSQL FTS | Elasticsearch |

|---|---|---|

| 10 萬篇文章全文搜尋 | ~5ms (GIN index) | ~3ms |

| 100 萬篇文章全文搜尋 | ~15ms (GIN index) | ~5ms |

| 1000 萬篇文章全文搜尋 | ~80ms (GIN index) | ~10ms |

| Typo tolerance (fuzzy) | 不支援原生 | 原生支援 |

| Faceted search | 需要手刻 | 原生支援 |

| 中文分詞 | 需要 extension | 原生支援 |

| 運維複雜度 | 零(已經有 PG) | 高(JVM heap、cluster 管理) |

| 資料一致性 | 即時(同一個 transaction) | 最終一致(有延遲) |

如果你的場景是技術部落格(幾千到幾萬篇)、中型電商(幾十萬商品)、內部知識庫——PostgreSQL FTS 綽綽有餘。不用多維護一套 Elasticsearch cluster、不用處理資料同步的最終一致性。

PostgreSQL FTS 夠用的條件:文件數量 500 萬以下、不需要 typo tolerance、搜尋延遲 50ms 以內可接受、不需要複雜的 faceted search、主要語言是英文或有現成分詞 extension。符合?用 PostgreSQL FTS。不符合?再考慮 Elasticsearch。

Full-Text Search 完整指南

基礎概念

tsvector — 文件經過 tokenize、normalize、去除 stop words 後的表示:

SELECT to_tsvector('english', 'The quick brown foxes jumped over the lazy dogs');

-- 結果:'brown':3 'dog':9 'fox':4 'jump':5 'lazi':8 'quick':2

-- 'The' 被移除(stop word)、'foxes' → 'fox'(stemming)、'jumped' → 'jump'tsquery — 搜尋條件的結構化表示,支援布林運算:

SELECT to_tsquery('english', 'database & performance'); -- AND

SELECT to_tsquery('english', 'database | search'); -- OR

SELECT to_tsquery('english', 'database & !oracle'); -- NOT

SELECT to_tsquery('english', 'full <-> text <-> search'); -- Phrase(連續出現)

SELECT to_tsquery('english', 'database <2> tuning'); -- 接近搜尋(隔 N 個詞內)Inverted Index — FTS 的核心資料結構是倒排索引:word → [doc_1, doc_5, doc_23, ...]。搜尋時先查每個 lexeme 出現在哪些 document,再根據 AND/OR/NOT 做集合運算。

Step 1: 基本搜尋

SELECT id, title, created_at

FROM articles

WHERE to_tsvector('english', title || ' ' || body)

@@ to_tsquery('english', 'database & performance');這個寫法可以動,但問題是每次查詢都重新計算 tsvector(full table scan + tokenization),10 萬行約 500ms,100 萬行約 5 秒。用 websearch_to_tsquery 可以處理更接近使用者習慣的輸入:

-- websearch_to_tsquery 支援引號短語和減號排除(PostgreSQL 11+)

SELECT id, title FROM articles

WHERE to_tsvector('english', title || ' ' || body)

@@ websearch_to_tsquery('english', '"full text search" -oracle postgresql');Step 2: 建立 tsvector 欄位

-- Generated Column(PostgreSQL 12+,推薦)

ALTER TABLE articles ADD COLUMN search_vector tsvector

GENERATED ALWAYS AS (

setweight(to_tsvector('english', coalesce(title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(body, '')), 'B')

) STORED;

-- 權重:A(最高,標題)> B > C > D(最低)舊版本用 trigger:

ALTER TABLE articles ADD COLUMN search_vector tsvector;

CREATE OR REPLACE FUNCTION articles_search_vector_update() RETURNS trigger AS $$

BEGIN

NEW.search_vector :=

setweight(to_tsvector('english', coalesce(NEW.title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(NEW.body, '')), 'B');

RETURN NEW;

END;

$$ LANGUAGE plpgsql;

CREATE TRIGGER trg_articles_search_vector

BEFORE INSERT OR UPDATE OF title, body ON articles

FOR EACH ROW EXECUTE FUNCTION articles_search_vector_update();

-- 初始化既有資料

UPDATE articles SET search_vector =

setweight(to_tsvector('english', coalesce(title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(body, '')), 'B');正式環境一律用 Stored——查詢快(直接讀已計算的值)、可建 GIN/GiST index。

Step 3: GIN Index

CREATE INDEX idx_articles_search ON articles USING GIN(search_vector);GIN vs GiST:GIN 查詢快(精確查找)但建立慢、index 較大;GiST 建立快、index 小(2-3x)但查詢有 false positive。99% 場景用 GIN——大部分應用讀多寫少,且 GIN 的 fastupdate 機制已大幅改善寫入效能。

Step 4: 排序與相關性

SELECT id, title, ts_rank(search_vector, query) AS rank

FROM articles, to_tsquery('english', 'database & performance') AS query

WHERE search_vector @@ query

ORDER BY rank DESC LIMIT 20;ts_rank 考慮詞頻、權重、出現頻率。ts_rank_cd 額外考慮匹配詞之間的距離(cover density),適合 phrase search。用正規化參數避免長文件總是排在前面:ts_rank(search_vector, query, 1) 除以 log(文件長度)。

進階 Boosting:

SELECT id, title,

(

ts_rank(search_vector, query, 1) * 1.0

+ CASE WHEN title ILIKE '%database%' THEN 0.5 ELSE 0 END

+ (1.0 / (1 + EXTRACT(EPOCH FROM (now() - created_at)) / 86400))

) AS combined_rank

FROM articles, to_tsquery('english', 'database & performance') AS query

WHERE search_vector @@ query

ORDER BY combined_rank DESC LIMIT 20;Step 5: 多語言支援

PostgreSQL 內建英文、法文、德文等歐洲語言的 stemming。中日韓需要額外 extension:

-- zhparser(最成熟的中文分詞 extension)

CREATE EXTENSION zhparser;

CREATE TEXT SEARCH CONFIGURATION chinese (PARSER = zhparser);

ALTER TEXT SEARCH CONFIGURATION chinese ADD MAPPING FOR n,v,a,i,e,l WITH simple;

SELECT to_tsvector('chinese', '我們正在學習 PostgreSQL 的全文搜尋功能');如果 managed PostgreSQL 不支援安裝 extension,用 pg_bigm(bi-gram,不需分詞引擎):

CREATE EXTENSION pg_bigm;

CREATE INDEX idx_articles_title_bigm ON articles USING GIN(title gin_bigm_ops);

SELECT * FROM articles WHERE title LIKE '%全文搜尋%'; -- 走 index多語言混合搜尋的務實做法——用 english 處理英文 stemming、用 simple 處理中文 exact match:

ALTER TABLE articles ADD COLUMN search_vector tsvector

GENERATED ALWAYS AS (

setweight(to_tsvector('english', coalesce(title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(body, '')), 'B') ||

setweight(to_tsvector('simple', coalesce(title, '')), 'C') ||

setweight(to_tsvector('simple', coalesce(body, '')), 'D')

) STORED;Step 6: Highlighting

SELECT id, title,

ts_headline('english', body, to_tsquery('english', 'database & performance'),

'StartSel=<mark>, StopSel=</mark>, MaxWords=50, MinWords=20, MaxFragments=3'

) AS snippet

FROM articles

WHERE search_vector @@ to_tsquery('english', 'database & performance')

ORDER BY ts_rank(search_vector, to_tsquery('english', 'database & performance')) DESC

LIMIT 20;注意:ts_headline 是 CPU 密集操作,先用 WHERE @@ LIMIT 篩選後再對少量結果做 highlight。

全站搜尋實作範例

CREATE TABLE articles (

id SERIAL PRIMARY KEY,

title VARCHAR(500) NOT NULL,

slug VARCHAR(500) UNIQUE NOT NULL,

body TEXT NOT NULL,

author_id INTEGER REFERENCES users(id),

category VARCHAR(100),

tags TEXT[],

published BOOLEAN DEFAULT FALSE,

published_at TIMESTAMPTZ,

created_at TIMESTAMPTZ DEFAULT now(),

search_vector tsvector GENERATED ALWAYS AS (

setweight(to_tsvector('english', coalesce(title, '')), 'A') ||

setweight(to_tsvector('english', coalesce(array_to_string(tags, ' '), '')), 'B') ||

setweight(to_tsvector('english', coalesce(body, '')), 'C')

) STORED

);

CREATE INDEX idx_articles_search ON articles USING GIN(search_vector);

CREATE INDEX idx_articles_published ON articles(published_at DESC) WHERE published = true;

CREATE INDEX idx_articles_tags ON articles USING GIN(tags) WHERE published = true;搜尋 Function:

CREATE OR REPLACE FUNCTION search_articles(

search_term TEXT, page_num INTEGER DEFAULT 1, page_size INTEGER DEFAULT 20

) RETURNS TABLE(

id INTEGER, title VARCHAR, slug VARCHAR, snippet TEXT,

category VARCHAR, tags TEXT[], published_at TIMESTAMPTZ, rank REAL, total_count BIGINT

) AS $$

DECLARE

query tsquery := websearch_to_tsquery('english', search_term);

offset_val INTEGER := (page_num - 1) * page_size;

BEGIN

RETURN QUERY

WITH matched AS (

SELECT a.id, a.title, a.slug, a.body, a.category, a.tags, a.published_at,

a.search_vector, ts_rank_cd(a.search_vector, query, 1) AS rank,

COUNT(*) OVER() AS total_count

FROM articles a

WHERE a.published = true AND a.search_vector @@ query

ORDER BY rank DESC LIMIT page_size OFFSET offset_val

)

SELECT m.id, m.title, m.slug,

ts_headline('english', m.body, query,

'StartSel=<mark>, StopSel=</mark>, MaxWords=40, MinWords=15, MaxFragments=2') AS snippet,

m.category, m.tags, m.published_at, m.rank, m.total_count

FROM matched m;

END;

$$ LANGUAGE plpgsql STABLE;效能基準(4 核 16GB RAM, GIN index):

| 文章數量 | 平均查詢 | p99 | Index 大小 |

|---|---|---|---|

| 10,000 | 1.2 ms | 3.5 ms | 12 MB |

| 100,000 | 4.8 ms | 15 ms | 120 MB |

| 1,000,000 | 18 ms | 52 ms | 1.1 GB |

| 10,000,000 | 85 ms | 210 ms | 10.5 GB |

100 萬篇文章 p99 在 52ms,非常舒適。1000 萬篇開始感受延遲。

PostgreSQL FTS 的限制

FTS 不夠的場景:(1)Fuzzy Matching——使用者打 “postgrsql” 找不到結果,pg_trgm 可以補充但不如 ES 成熟。(2)Faceted Search——搜尋 “laptop” 同時顯示品牌計數、價格區間計數。(3)超大規模——1000 萬+ 文件且需要複雜 ranking/synonym。(4)High Write Throughput——每秒上千筆 INSERT/UPDATE 需要立即可搜尋。

符合以上任何一個場景?考慮 Elasticsearch。

pg_trgm 作為 fuzzy search 的補充:

CREATE EXTENSION pg_trgm;

CREATE INDEX idx_articles_title_trgm ON articles USING GIN(title gin_trgm_ops);

SELECT title, similarity(title, 'postgrsql') AS sim

FROM articles WHERE title % 'postgrsql' ORDER BY sim DESC;JSONB 進階查詢

很多團隊為了存非結構化資料去用 MongoDB,但 PostgreSQL 的 JSONB 搭配 GIN index,在大部分場景下效能相當。

JSONB vs JSON

| 面向 | JSON | JSONB |

|---|---|---|

| 儲存格式 | 文字(保留原始格式) | 二進位(key 自動排序) |

| 寫入速度 | 快(不需解析) | 略慢(需要解析) |

| 讀取速度 | 慢(每次重新解析) | 快(直接讀取) |

| 可索引 | 不行 | 可以(GIN) |

| 支援 containment (@>) | 不行 | 支援 |

結論:除非需要保留 JSON 原始格式,否則一律用 JSONB。

JSONB 查詢範例

CREATE TABLE products (

id SERIAL PRIMARY KEY,

name VARCHAR(200),

data JSONB NOT NULL DEFAULT '{}'

);

INSERT INTO products (name, data) VALUES

('MacBook Pro', '{

"brand": "Apple", "category": "electronics",

"specs": {"cpu": "M3 Pro", "ram": "18GB", "storage": "512GB"},

"price": 59900,

"tags": ["laptop", "apple", "professional"],

"in_stock": true

}');存取欄位:

SELECT data->'specs' FROM products; -- → {"cpu": "M3 Pro", ...}(JSONB 型別)

SELECT data->>'brand' FROM products; -- → 'Apple'(TEXT 型別)

SELECT data->'specs'->>'cpu' FROM products; -- → 'M3 Pro'(巢狀存取)

SELECT data#>>'{specs,cpu}' FROM products; -- → 'M3 Pro'(路徑存取)條件查詢:

-- Containment 運算子(@>):最常用,可走 GIN index

SELECT * FROM products WHERE data @> '{"category": "electronics"}';

SELECT * FROM products WHERE data @> '{"specs": {"ram": "18GB"}}';

-- Existence 運算子(?):檢查 key 或 array 元素是否存在

SELECT * FROM products WHERE data ? 'brand';

SELECT * FROM products WHERE data->'tags' ? 'laptop';

-- 數值比較(注意要轉型)

SELECT * FROM products WHERE (data->>'price')::INTEGER > 50000;

-- jsonpath(PostgreSQL 12+)——更直覺的查詢語法

SELECT * FROM products WHERE data @? '$.tags[*] ? (@ == "professional")';JSONB + GIN Index:

-- 預設:支援 @>, ?, ?|, ?& 運算子

CREATE INDEX idx_products_data ON products USING GIN(data);

-- jsonb_path_ops:只支援 @>,但 index 小 2-3x、查詢快 ~2x

CREATE INDEX idx_products_data ON products USING GIN(data jsonb_path_ops);

-- 針對特定 key 建 index(更精準)

CREATE INDEX idx_products_brand ON products((data->>'brand'));

CREATE INDEX idx_products_price ON products(((data->>'price')::INTEGER));索引策略

| 類型 | 適用場景 | 範例 |

|---|---|---|

| B-tree | 等值、範圍、排序(預設) | WHERE id = 1, ORDER BY date |

| Hash | 純等值 | WHERE email = 'x@y.com' |

| GIN | Full-text、JSONB、Array | FTS @@、JSONB @> |

| GiST | 幾何、範圍 | PostGIS、range types |

| SP-GiST | 分區搜尋樹 | IP 範圍 |

| BRIN | 大型順序資料 | 時序資料、append-only |

Partial Index——只對符合條件的行建索引:

CREATE INDEX idx_active_users ON users(email) WHERE active = true;

-- 100 萬行只有 10 萬 active → index 只有完整 index 的 10%Expression Index——對計算結果建索引:

CREATE INDEX idx_lower_email ON users(lower(email));

-- 查詢必須完全匹配:WHERE lower(email) = 'user@example.com'Covering Index(INCLUDE)——附帶欄位實現 Index Only Scan:

CREATE INDEX idx_orders_customer ON orders(customer_id) INCLUDE (total, created_at);

-- SELECT customer_id, total, created_at WHERE customer_id = 12345 → Index Only ScanBRIN Index——極小的 index 處理極大的 table:

CREATE INDEX idx_sensor_recorded ON sensor_data USING BRIN(recorded_at)

WITH (pages_per_range = 128);

-- 1 億行:B-tree ~2GB vs BRIN ~2MB。適合物理排序的 append-only 資料。效能調校

EXPLAIN ANALYZE 讀懂執行計劃

EXPLAIN ANALYZE

SELECT a.title, u.name FROM articles a

JOIN users u ON a.author_id = u.id

WHERE a.published = true AND a.search_vector @@ to_tsquery('english', 'database')

ORDER BY a.published_at DESC LIMIT 20;Limit (cost=28.53..28.58 rows=20 width=68) (actual time=0.423..0.428 rows=20 loops=1)

-> Sort (cost=28.53..28.72 rows=75 width=68) (actual time=0.422..0.425 rows=20 loops=1)

-> Nested Loop (cost=12.52..26.88 rows=75 width=68) (actual time=0.152..0.365 rows=75 loops=1)

-> Bitmap Heap Scan on articles a (cost=12.08..18.50 rows=75 width=44)

-> Bitmap Index Scan on idx_articles_search (actual time=0.085..0.085 rows=80 loops=1)

-> Index Scan using users_pkey on users u (actual time=0.001..0.001 rows=1 loops=75)

Planning Time: 0.285 ms

Execution Time: 0.468 ms

讀懂重要欄位:cost=12.08..18.50(估計成本 startup..total)、rows=75(估計行數)、actual time=0.120..0.215(實際耗時 ms)、loops=1(執行次數)。當估計行數和實際行數差異大時,代表統計資訊過時,需要 ANALYZE table_name。

掃描方式好壞排序:

| 掃描類型 | 說明 | 評價 |

|---|---|---|

| Seq Scan | 全表掃描,逐行讀取 | 小表可以,大表危險 |

| Bitmap Index Scan + Bitmap Heap Scan | 先用 index 建 bitmap,再批次讀 table | 中等 |

| Index Scan | 用 index 找到行,再回 table 讀資料 | 好 |

| Index Only Scan | 只讀 index,不碰 table | 最好 |

常見效能殺手:

-- 1. 意外的 Seq Scan → 檢查是否缺 index

EXPLAIN ANALYZE SELECT * FROM users WHERE email = 'test@example.com';

-- 2. 估計行數和實際行數差異太大 → 更新統計

ANALYZE users;

-- 3. Sort 使用 external merge(記憶體不夠)→ 提高 work_mem

-- 看到 Sort Method: external merge Disk: 102400kB 就是問題

SET work_mem = '256MB';

-- 4. Nested Loop 的 loops 太大 → N+1 問題

-- 如果 loops=100000,代表 inner scan 執行了 10 萬次關鍵參數調校

# PostgreSQL 效能參數(16GB RAM, SSD, 4 cores)

shared_buffers = 4GB # RAM 的 25%,不超過 40%

effective_cache_size = 12GB # RAM 的 75%(含 OS page cache,影響 planner 決策)

work_mem = 128MB # per-operation,保守設定,特定查詢用 SET LOCAL 提高

maintenance_work_mem = 1GB # VACUUM/CREATE INDEX

random_page_cost = 1.1 # SSD(HDD 用 4.0)

effective_io_concurrency = 200 # SSD(HDD 用 2)

max_parallel_workers_per_gather = 2

max_parallel_workers = 4

autovacuum = on

autovacuum_naptime = 30

autovacuum_vacuum_scale_factor = 0.1

autovacuum_analyze_scale_factor = 0.05

autovacuum_vacuum_cost_limit = 2000不同規模的參數:

8GB 16GB 64GB 128GB

shared_buffers 2GB 4GB 16GB 32GB

effective_cache_size 6GB 12GB 48GB 96GB

work_mem 64MB 128MB 256MB 512MB

maintenance_work_mem 512MB 1GB 2GB 4GB

常見效能問題

N+1 Queries:ORM lazy loading 的陷阱。

# 錯誤:每篇文章各發一次 SELECT 查 author,20 篇 = 21 個查詢

articles = Article.objects.filter(published=True)[:20]

for article in articles:

print(article.author.name) # 觸發 SELECT name FROM users WHERE id = ?

# 正確:用 JOIN 一次搞定

articles = Article.objects.filter(published=True).select_related('author')[:20]-- SQL 層面:一次 JOIN 取代 N+1

SELECT a.*, u.name AS author_name

FROM articles a JOIN users u ON a.author_id = u.id

WHERE a.published = true LIMIT 20;Missing Index:

SELECT relname, seq_scan, seq_tup_read,

seq_tup_read / GREATEST(seq_scan, 1) AS avg_seq_tup_read

FROM pg_stat_user_tables WHERE seq_scan > 0

ORDER BY seq_tup_read DESC LIMIT 20;

-- avg_seq_tup_read 很大 → 需要加 indexTable Bloat:MVCC 的 dead tuples 累積導致表膨脹。

SELECT relname, n_dead_tup,

ROUND(n_dead_tup * 100.0 / GREATEST(n_live_tup + n_dead_tup, 1), 2) AS dead_pct

FROM pg_stat_user_tables WHERE n_dead_tup > 10000

ORDER BY n_dead_tup DESC;

-- dead_pct > 20% → 調 autovacuum 參數或手動 VACUUMLock Contention:

SELECT blocked.pid, blocked_activity.query AS blocked_query,

blocking.pid AS blocking_pid, blocking_activity.query AS blocking_query

FROM pg_locks blocked

JOIN pg_locks blocking ON blocked.transactionid = blocking.transactionid AND blocked.pid != blocking.pid

JOIN pg_stat_activity blocked_activity ON blocked.pid = blocked_activity.pid

JOIN pg_stat_activity blocking_activity ON blocking.pid = blocking_activity.pid

WHERE NOT blocked.granted;pg_stat_statements:找出慢查詢

CREATE EXTENSION IF NOT EXISTS pg_stat_statements;

SELECT query, calls,

ROUND(total_exec_time::NUMERIC, 2) AS total_time_ms,

ROUND((total_exec_time / calls)::NUMERIC, 2) AS avg_time_ms,

ROUND((shared_blks_hit * 100.0 /

GREATEST(shared_blks_hit + shared_blks_read, 1))::NUMERIC, 2) AS cache_hit_pct

FROM pg_stat_statements

ORDER BY total_exec_time DESC LIMIT 20;解讀方式:

total_exec_time大:系統整體花最多時間在這些查詢上。即使 avg_time 不長,calls 太多也會累積。avg_time_ms大:單次執行最慢的查詢,可能需要加 index 或重寫。cache_hit_pct低:大量讀磁碟而非快取,需要更多shared_buffers或更好的 index。

-- 找出 I/O bound 的查詢

SELECT query, calls, shared_blks_hit, shared_blks_read,

ROUND((shared_blks_hit * 100.0 /

GREATEST(shared_blks_hit + shared_blks_read, 1))::NUMERIC, 2) AS cache_hit_pct

FROM pg_stat_statements WHERE calls > 100

ORDER BY cache_hit_pct ASC LIMIT 20;

-- 重置統計(調校後重置,觀察新效能)

SELECT pg_stat_statements_reset();全局 cache hit ratio 應該 > 99%:

SELECT ROUND(sum(heap_blks_hit) * 100.0 /

GREATEST(sum(heap_blks_hit) + sum(heap_blks_read), 1), 2) AS cache_hit_ratio

FROM pg_statio_user_tables;常見問題與風險

-

Over-indexing:一張表 15 個 index,每次 INSERT 成本 10 倍以上。定期檢查未使用的 index:

SELECT indexrelname, idx_scan, pg_size_pretty(pg_relation_size(indexrelid)) AS size FROM pg_stat_user_indexes WHERE idx_scan = 0 AND schemaname = 'public' ORDER BY pg_relation_size(indexrelid) DESC; -

VACUUM 被阻擋:long-running transaction 阻擋 VACUUM 回收 dead tuples。設定

idle_in_transaction_session_timeout = '5min'避免。 -

Long-running Transactions:一個 transaction 開了 2 小時沒結束,之後產生的 dead tuples 全部無法回收——最隱蔽的效能殺手。

SELECT pid, now() - xact_start AS duration, query, state FROM pg_stat_activity WHERE xact_start IS NOT NULL ORDER BY xact_start LIMIT 10; -

work_mem 設太高:per-operation 不是 per-connection。

work_mem = 1GB+ 100 連線 = 最壞 100GB。全局保守,特定查詢用SET LOCAL work_mem = '512MB'。 -

FTS index 碎片化:GIN index 經大量 UPDATE 後碎片化。每月排程

REINDEX INDEX CONCURRENTLY idx_articles_search(PostgreSQL 12+,不鎖表)。 -

Connection exhaustion:沒有 PgBouncer,連線數爆掉。參考 基礎部署篇 設定。

快速檢查清單

部署新的 PostgreSQL 或接手既有系統時:

-- 1. pg_stat_statements 已啟用?

SELECT * FROM pg_available_extensions WHERE name = 'pg_stat_statements';

-- 2. 全局 cache hit ratio > 99%?

SELECT ROUND(sum(heap_blks_hit) * 100.0 /

GREATEST(sum(heap_blks_hit) + sum(heap_blks_read), 1), 2) AS ratio

FROM pg_statio_user_tables;

-- 3. 沒有 bloated tables?(dead_pct < 20%)

SELECT relname, n_dead_tup,

ROUND(n_dead_tup * 100.0 / GREATEST(n_live_tup + n_dead_tup, 1), 2) AS dead_pct

FROM pg_stat_user_tables WHERE n_dead_tup > 10000 ORDER BY dead_pct DESC;

-- 4. 沒有未使用的 index?

SELECT indexrelname, idx_scan, pg_size_pretty(pg_relation_size(indexrelid)) AS size

FROM pg_stat_user_indexes WHERE idx_scan = 0 AND schemaname = 'public'

ORDER BY pg_relation_size(indexrelid) DESC;

-- 5. 沒有 long-running transactions?

SELECT pid, now() - xact_start AS duration, state, query

FROM pg_stat_activity WHERE xact_start IS NOT NULL AND state != 'idle'

ORDER BY duration DESC;

-- 6. 連線使用率正常?

SELECT count(*) AS total,

count(*) FILTER (WHERE state = 'active') AS active,

count(*) FILTER (WHERE state = 'idle') AS idle,

count(*) FILTER (WHERE state = 'idle in transaction') AS idle_in_tx

FROM pg_stat_activity;

-- 7. autovacuum 正常運作?

SELECT relname, last_autovacuum, last_autoanalyze

FROM pg_stat_user_tables WHERE last_autovacuum IS NULL AND n_live_tup > 10000

ORDER BY n_live_tup DESC;延伸閱讀

- Database(PostgreSQL) — 基礎部署、PgBouncer、備份還原

- 資料庫全景 — 選型指南:什麼時候該用什麼資料庫

- Elasticsearch 深入 — 當 PostgreSQL FTS 不夠用的時候