Docker Compose Templates in Practice: Stop Starting from Scratch Every Time

Rewriting docker-compose.yml every time you start a new project? Here is a set of ready-to-use templates.

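Once a team has three or more projects, you notice that everyone's docker-compose.yml looks roughly the same, but never quite identical. Someone forgets the health check, someone leaves log size unbounded until the disk fills up, and someone names volumes so arbitrarily that operations staff cannot find where the data lives. A standardized Compose template is not meant to restrict flexibility. It is meant to let the 80% of common scenarios deploy correctly without any thought, so that effort goes to the 20% that really needs customization.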

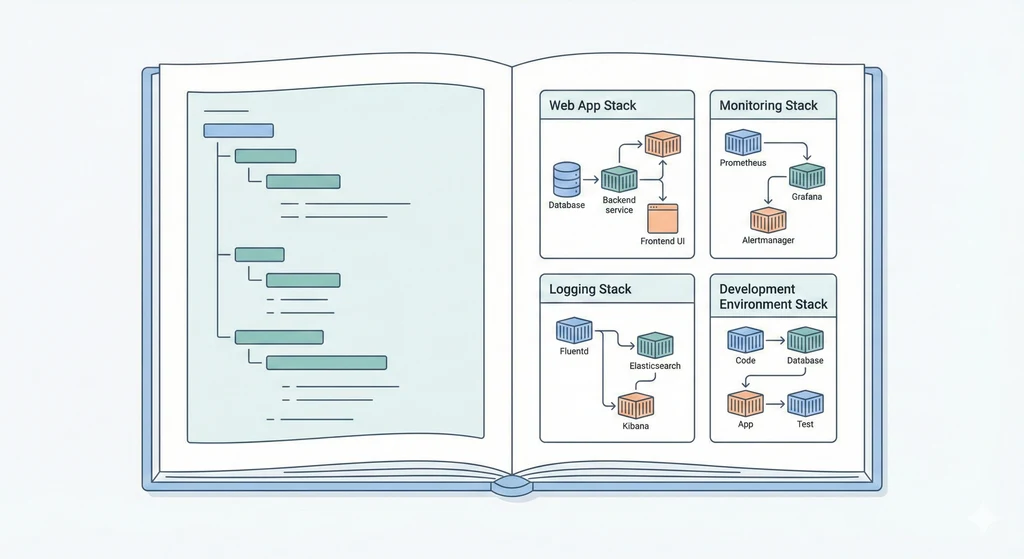

Positioning of the four templates and how they connect

flowchart TD
    subgraph DevEnv["Template 4: Development Environment"]
        DevDB[(PostgreSQL)]
        DevRedis[(Redis)]
        DevMinio[(MinIO)]
        DevMail[(Mailhog)]
    end
    subgraph AppStack["Template 1: Web App Stack"]
        Nginx[Nginx<br/>Reverse Proxy]
        App[Application<br/>API Server]
        DB[(PostgreSQL)]
        Cache[(Redis)]
    end
    subgraph MonStack["Template 2: Monitoring Stack"]
        Prom[Prometheus]
        Grafana[Grafana]
        NodeExp[Node Exporter]
    end
    subgraph LogStack["Template 3: Logging Stack"]
        ES[(Elasticsearch)]
        Filebeat[Filebeat]
        Kibana[Kibana]
    end
    AppStack -->|metrics endpoint| MonStack
    AppStack -->|container logs| LogStack
    MonStack -->|self metrics| LogStack
    style DevEnv fill:#e8f5e9,stroke:#388e3c
    style AppStack fill:#e3f2fd,stroke:#1976d2
    style MonStack fill:#fff3e0,stroke:#f57c00
    style LogStack fill:#fce4ec,stroke:#c62828

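In development, use Template 4 to run dependency services on your local machine. For deployment, use Template 1 to run the production App Stack, then layer Templates 2 and 3 on top for monitoring and log collection. The four templates are independent of each other but can be connected through Docker networks.

Template 1: Web App Stack (Nginx + App + PostgreSQL + Redis)

This is the most common web application architecture. Nginx handles reverse proxying and static file serving, App runs the business logic, PostgreSQL stores persistent data, and Redis handles caching and session storage.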

# docker-compose.app.yml
version: "3.8"

services:
  nginx:
    image: nginx:1.25-alpine
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./nginx/conf.d:/etc/nginx/conf.d:ro
      - ./nginx/ssl:/etc/nginx/ssl:ro
      - static_files:/var/www/static:ro
    depends_on:
      app:
        condition: service_healthy
    networks:
      - frontend
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost/health"]
      interval: 30s
      timeout: 5s
      retries: 3

  app:
    image: ${APP_IMAGE:-myapp:latest}
    env_file:
      - .env
    environment:
      - DATABASE_URL=postgresql://${DB_USER}:${DB_PASSWORD}@db:5432/${DB_NAME}
      - REDIS_URL=redis://cache:6379/0
    volumes:
      - static_files:/app/static
      - upload_data:/app/uploads
    depends_on:
      db:
        condition: service_healthy
      cache:
        condition: service_healthy
    networks:
      - frontend
      - backend
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "5"
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8000/health"]
      interval: 15s
      timeout: 5s
      retries: 3
      start_period: 30s
    deploy:
      resources:
        limits:
          cpus: "1.0"
          memory: 512M
        reservations:
          cpus: "0.25"
          memory: 128M

  db:
    image: postgres:16-alpine
    environment:
      POSTGRES_USER: ${DB_USER}
      POSTGRES_PASSWORD: ${DB_PASSWORD}
      POSTGRES_DB: ${DB_NAME}
    volumes:
      - pg_data:/var/lib/postgresql/data
      - ./db/init:/docker-entrypoint-initdb.d:ro
    networks:
      - backend
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U ${DB_USER} -d ${DB_NAME}"]
      interval: 10s
      timeout: 5s
      retries: 5
    deploy:
      resources:
        limits:
          cpus: "1.0"
          memory: 1G

  cache:
    image: redis:7-alpine
    command: redis-server --appendonly yes --maxmemory 256mb --maxmemory-policy allkeys-lru
    volumes:
      - redis_data:/data
    networks:
      - backend
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "5m"
        max-file: "3"
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 3s
      retries: 3
    deploy:
      resources:
        limits:
          cpus: "0.5"
          memory: 300M

volumes:
  pg_data:
    name: ${PROJECT_NAME:-myapp}_pg_data
  redis_data:
    name: ${PROJECT_NAME:-myapp}_redis_data
  static_files:
    name: ${PROJECT_NAME:-myapp}_static
  upload_data:
    name: ${PROJECT_NAME:-myapp}_uploads

networks:
  frontend:
    name: ${PROJECT_NAME:-myapp}_frontend
  backend:
    name: ${PROJECT_NAME:-myapp}_backend
    internal: true

Why configure it this way?

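- Network separation: the frontend network is for services that need external access (Nginx, App). The backend network is marked internal: true, so only App can reach the DB and Redis, and Nginx cannot touch the database directly. This is the most basic form of network isolation and corresponds to the subnet segmentation concept covered in Network & DNS.
- Health check: every service has a health check, and depends_on combined with condition: service_healthy ensures the correct startup order. A depends_on without a health check only guarantees that the container has started, not that the service is ready.
- Resource limits: deploy.resources caps the CPU and memory of each container, so one service cannot consume all resources and get the others OOM-killed.
- Named volumes: names carry a ${PROJECT_NAME} prefix, so volume names from multiple projects on the same host do not collide.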

Template 2: Monitoring Stack (Prometheus + Grafana + Node Exporter)

Use this together with Metrics & Monitoring. The template collects host-level and container-level metrics and visualizes them in Grafana.

# docker-compose.monitoring.yml
version: "3.8"

services:
  prometheus:
    image: prom/prometheus:v2.48.0
    command:
      - "--config.file=/etc/prometheus/prometheus.yml"
      - "--storage.tsdb.path=/prometheus"
      - "--storage.tsdb.retention.time=30d"
      - "--web.enable-lifecycle"
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml:ro
      - ./prometheus/rules:/etc/prometheus/rules:ro
      - prom_data:/prometheus
    ports:
      - "9090:9090"
    networks:
      - monitoring
      - backend
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD", "wget", "--spider", "-q", "http://localhost:9090/-/healthy"]
      interval: 30s
      timeout: 5s
      retries: 3
    deploy:
      resources:
        limits:
          cpus: "1.0"
          memory: 2G

  grafana:
    image: grafana/grafana:10.2.0
    environment:
      GF_SECURITY_ADMIN_USER: ${GRAFANA_USER:-admin}
      GF_SECURITY_ADMIN_PASSWORD: ${GRAFANA_PASSWORD}
      GF_INSTALL_PLUGINS: grafana-clock-panel,grafana-piechart-panel
    volumes:
      - grafana_data:/var/lib/grafana
      - ./grafana/provisioning:/etc/grafana/provisioning:ro
      - ./grafana/dashboards:/var/lib/grafana/dashboards:ro
    ports:
      - "3000:3000"
    depends_on:
      prometheus:
        condition: service_healthy
    networks:
      - monitoring
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD", "wget", "--spider", "-q", "http://localhost:3000/api/health"]
      interval: 30s
      timeout: 5s
      retries: 3

  node-exporter:
    image: prom/node-exporter:v1.7.0
    command:
      - "--path.procfs=/host/proc"
      - "--path.rootfs=/rootfs"
      - "--path.sysfs=/host/sys"
      - "--collector.filesystem.mount-points-exclude=^/(sys|proc|dev|host|etc)($$|/)"
    volumes:
      - /proc:/host/proc:ro
      - /sys:/host/sys:ro
      - /:/rootfs:ro
    ports:
      - "9100:9100"
    networks:
      - monitoring
    restart: unless-stopped
    deploy:
      resources:
        limits:
          cpus: "0.2"
          memory: 128M

  cadvisor:
    image: gcr.io/cadvisor/cadvisor:v0.47.2
    volumes:
      - /:/rootfs:ro
      - /var/run:/var/run:ro
      - /sys:/sys:ro
      - /var/lib/docker/:/var/lib/docker:ro
    ports:
      - "8080:8080"
    networks:
      - monitoring
    restart: unless-stopped
    deploy:
      resources:
        limits:
          cpus: "0.3"
          memory: 256M

volumes:
  prom_data:
    name: monitoring_prom_data
  grafana_data:
    name: monitoring_grafana_data

networks:
  monitoring:
    name: monitoring
  backend:
    external: true
    name: ${PROJECT_NAME:-myapp}_backend

Connecting to the App Stack: Prometheus in the monitoring stack joins the App Stack's backend network (declared as external), which lets it scrape the App's /metrics endpoint. You do not need to expose the App's port on the host, because Prometheus reaches it directly over Docker's internal network.
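The scrape side of this wiring could look roughly like the following prometheus.yml. This is a minimal sketch, not a definitive configuration: it assumes the App serves /metrics on port 8000 (the port its health check uses; the templates do not state the metrics port), and the job names and the 15s interval are illustrative choices.

```yaml
# prometheus/prometheus.yml (sketch; job names and interval are assumptions)
global:
  scrape_interval: 15s

rule_files:
  - /etc/prometheus/rules/*.yml   # matches the ./prometheus/rules bind mount

scrape_configs:
  - job_name: app
    metrics_path: /metrics
    static_configs:
      - targets: ["app:8000"]     # resolved over the shared backend network

  - job_name: node-exporter
    static_configs:
      - targets: ["node-exporter:9100"]

  - job_name: cadvisor
    static_configs:
      - targets: ["cadvisor:8080"]
```

The targets use Compose service names rather than host ports, which is why the App Stack never has to publish port 8000.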

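Template 3: Logging Stack (Elasticsearch + Filebeat + Kibana)

This corresponds to the EFK/ELK architecture in Log Management. Filebeat collects container logs and ships them to Elasticsearch for storage, and Kibana provides the search and analysis UI.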

# docker-compose.logging.yml
version: "3.8"

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.11.0
    environment:
      - discovery.type=single-node
      - xpack.security.enabled=false
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - cluster.name=logging-cluster
    volumes:
      - es_data:/usr/share/elasticsearch/data
    ports:
      - "9200:9200"
    networks:
      - logging
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD-SHELL", "curl -f http://localhost:9200/_cluster/health || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 60s
    deploy:
      resources:
        limits:
          cpus: "2.0"
          memory: 1G

  filebeat:
    image: docker.elastic.co/beats/filebeat:8.11.0
    user: root
    command: filebeat -e -strict.perms=false
    volumes:
      - ./filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml:ro
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - filebeat_data:/usr/share/filebeat/data
    depends_on:
      elasticsearch:
        condition: service_healthy
    networks:
      - logging
    restart: unless-stopped
    deploy:
      resources:
        limits:
          cpus: "0.5"
          memory: 256M

  kibana:
    image: docker.elastic.co/kibana/kibana:8.11.0
    environment:
      ELASTICSEARCH_HOSTS: '["http://elasticsearch:9200"]'
    ports:
      - "5601:5601"
    depends_on:
      elasticsearch:
        condition: service_healthy
    networks:
      - logging
    restart: unless-stopped
    logging:
      driver: json-file
      options:
        max-size: "10m"
        max-file: "3"
    healthcheck:
      test: ["CMD-SHELL", "curl -f http://localhost:5601/api/status || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 120s

volumes:
  es_data:
    name: logging_es_data
  filebeat_data:
    name: logging_filebeat_data

networks:
  logging:
    name: logging

Filebeat mounts Docker's container log directory and docker.sock so it can automatically discover all containers and collect their logs. It is lighter than Fluentd and suits single-host environments. If you need more complex log processing (parsing, transformation, routing), consider switching to Fluentd or Logstash.
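The template mounts ./filebeat/filebeat.yml but does not show its contents. One possible minimal version, assuming Filebeat's Docker autodiscover provider with hints enabled is an acceptable way to pick up every container, is sketched below. Treat it as a starting point rather than the author's actual file.

```yaml
# filebeat/filebeat.yml (sketch; the source does not show this file)
filebeat.autodiscover:
  providers:
    - type: docker          # watches containers through the mounted docker.sock
      hints.enabled: true   # collect logs from containers using the default container input

output.elasticsearch:
  hosts: ["http://elasticsearch:9200"]   # plain HTTP, since xpack security is disabled in the template
```

With hints enabled, individual containers can later tune or opt out of collection through co.elastic.logs/* labels without touching this file.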

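Template 4: Development Environment (PostgreSQL + Redis + MinIO + Mailhog)

Developers do not need to install these services locally. A single docker compose up -d brings up every dependency, and docker compose down removes the containers when you are done (named volumes persist unless you add -v). Clean, repeatable, and no pollution of the local environment.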

# docker-compose.dev.yml
version: "3.8"

services:
  db:
    image: postgres:16-alpine
    environment:
      POSTGRES_USER: dev
      POSTGRES_PASSWORD: devpassword
      POSTGRES_DB: myapp_dev
    ports:
      - "5432:5432"
    volumes:
      - dev_pg_data:/var/lib/postgresql/data
      - ./db/init:/docker-entrypoint-initdb.d:ro
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U dev"]
      interval: 5s
      timeout: 3s
      retries: 5

  cache:
    image: redis:7-alpine
    ports:
      - "6379:6379"
    volumes:
      - dev_redis_data:/data
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 5s
      timeout: 3s
      retries: 3

  minio:
    image: minio/minio:latest
    command: server /data --console-address ":9001"
    environment:
      MINIO_ROOT_USER: minioadmin
      MINIO_ROOT_PASSWORD: minioadmin
    ports:
      - "9000:9000"
      - "9001:9001"
    volumes:
      - dev_minio_data:/data
    healthcheck:
      test: ["CMD", "mc", "ready", "local"]
      interval: 10s
      timeout: 5s
      retries: 3

  mailhog:
    image: mailhog/mailhog:latest
    ports:
      - "1025:1025"
      - "8025:8025"

volumes:
  dev_pg_data:
  dev_redis_data:
  dev_minio_data:

MinIO provides S3-compatible object storage (see Storage Management), so development does not require a connection to real AWS S3. Mailhog intercepts all outgoing email and provides a web UI for viewing it, so there is no risk of accidentally emailing real users during development. All ports are mapped directly to the host so development tools can connect easily.
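If the application itself also runs in a container during development, an overlay file along these lines could point it at the four services. This is only a sketch under stated assumptions: the file name, the build context, port 8000, and every variable name other than DATABASE_URL and REDIS_URL (which come from Template 1) are hypothetical and depend on how your app reads its configuration.

```yaml
# docker-compose.dev.app.yml (hypothetical overlay; combine it with
# docker-compose.dev.yml using two -f flags so db/cache/minio/mailhog resolve)
services:
  app:
    build: .                      # assumes a Dockerfile in the project root
    ports:
      - "8000:8000"               # assumed app port, matching Template 1's health check
    environment:
      DATABASE_URL: postgresql://dev:devpassword@db:5432/myapp_dev
      REDIS_URL: redis://cache:6379/0
      # The names below are illustrative, not defined anywhere in the templates
      S3_ENDPOINT_URL: http://minio:9000
      S3_ACCESS_KEY: minioadmin
      S3_SECRET_KEY: minioadmin
      SMTP_HOST: mailhog
      SMTP_PORT: "1025"
    depends_on:
      db:
        condition: service_healthy
      cache:
        condition: service_healthy
      minio:
        condition: service_healthy
```

When the app runs directly on the host instead, the same services are reachable on localhost through the published ports (5432, 6379, 9000, 1025).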

Common Best Practices

.env variable management

Move values that change (passwords, image versions, project name) into a .env file:

# .env
PROJECT_NAME=myapp
APP_IMAGE=myapp:v1.2.3
DB_USER=appuser
DB_PASSWORD=strong-password-here
DB_NAME=myapp_production
GRAFANA_PASSWORD=another-strong-password

- Keep .env out of Git (add it to .gitignore)
- Provide a .env.example that lists every required variable and its default value
- See Secrets & Config for password management strategy
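A missing .env entry otherwise turns into an empty string at interpolation time. One way to make that fail fast is Compose's required-variable syntax, sketched here on the db service from Template 1; the error messages and the default database name are illustrative.

```yaml
services:
  db:
    image: postgres:16-alpine
    environment:
      # ${VAR:?message} aborts with the message if VAR is unset or empty
      POSTGRES_USER: ${DB_USER:?DB_USER must be set in .env}
      POSTGRES_PASSWORD: ${DB_PASSWORD:?DB_PASSWORD must be set in .env}
      # ${VAR:-default} falls back to a default instead of failing
      POSTGRES_DB: ${DB_NAME:-myapp}
```

Using the strict form for secrets and the default form for harmless values keeps the .env.example short while still catching a forgotten password before any container starts.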

Volume strategy: Named Volumes vs Bind Mounts

flowchart LR
    subgraph Named["Named Volumes"]
        NV1["pg_data"]
        NV2["redis_data"]
        NV3["es_data"]
    end
    subgraph Bind["Bind Mounts"]
        BM1["./nginx/conf.d"]
        BM2["./prometheus/prometheus.yml"]
        BM3["./db/init"]
    end
    Named -->|Purpose| Data["Persistent data<br/>Managed by the container<br/>No direct editing needed"]
    Bind -->|Purpose| Config["Config files<br/>Managed by developers<br/>Edited frequently"]

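- Named volumes: for data that must persist, such as databases, caches, and Prometheus. Docker manages the storage location, and you use the docker volume commands to locate and manage them for backups.
- Bind mounts: for config files, initialization scripts, and SSL certificates. Files are edited directly on the host and read by the container. Remember to add :ro (read-only) so the container cannot modify them by accident.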
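To show the two mount types working together, here is a hedged sketch of a one-off helper that archives a named volume into a bind-mounted directory. The file name, service name, and ./backups path are hypothetical. A file-level copy of a running PostgreSQL data directory is not guaranteed to be consistent, so stop the db service first or prefer pg_dump for real backups.

```yaml
# docker-compose.backup.yml (hypothetical one-off helper)
services:
  pg-backup:
    image: alpine:3.19
    profiles: ["backup"]          # never started by a plain `docker compose up`
    volumes:
      - pg_data:/source:ro        # the named volume, mounted read-only
      - ./backups:/backup         # bind mount where the archive is written
    command: ["tar", "czf", "/backup/pg_data.tar.gz", "-C", "/source", "."]

volumes:
  pg_data:
    external: true                # reuse the volume created by the App Stack
    name: ${PROJECT_NAME:-myapp}_pg_data
```

Because the volume name follows the ${PROJECT_NAME} prefix convention, the helper can find the right data without knowing where Docker stores it on disk.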

Network design

flowchart TD
    Internet((Internet)) --> Nginx
    subgraph frontend["frontend network"]
        Nginx[Nginx]
        App[App]
    end
    subgraph backend["backend network (internal)"]
        App2[App]
        DB[(PostgreSQL)]
        Cache[(Redis)]
    end
    subgraph monitoring["monitoring network"]
        Prom[Prometheus]
        Grafana[Grafana]
    end
    App --- App2
    Prom -.->|scrape /metrics| App2
    style backend fill:#ffe0e0,stroke:#c62828
    style frontend fill:#e0f0ff,stroke:#1976d2
    style monitoring fill:#fff3e0,stroke:#f57c00

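- frontend: externally facing services (Nginx, App)
- backend: internal services (DB, Redis), set to internal: true to block external access
- monitoring: a separate network for monitoring services; Prometheus additionally joins the backend network when it needs to scrape the App
- Principle: each service joins only the networks it needs (least privilege)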

Health check configuration

Every service should have a health check. Without one, Docker only knows that the process is running, not whether the service is responding correctly.

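# Common health check example
healthcheck:
  test: ["CMD", "curl", "-f", "http://localhost:8000/health"]
  interval: 15s       # run the check every 15 seconds
  timeout: 5s         # no response within 5 seconds counts as a failure
  retries: 3          # mark as unhealthy only after 3 consecutive failures
  start_period: 30s   # failures in the first 30 seconds do not count toward retries (startup grace period)

Resource Limits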

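deploy:
  resources:
    limits:
      cpus: "1.0"
      memory: 512M
    reservations:
      cpus: "0.25"
      memory: 128M

- limits: the hard ceiling. A container that exceeds it is throttled (CPU) or killed (OOM kill).
- reservations: the soft floor. Docker tries to guarantee at least this much when scheduling.
- Always set a memory limit. Otherwise a single memory leak can take down the entire host.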

Logging Driver

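logging:
  driver: json-file
  options:
    max-size: "10m"   # each log file is capped at 10 MB
    max-file: "3"     # keep at most 3 files

Without these options, Docker's default json-file driver does not rotate, so the log keeps growing until the disk is full. This is one of the most common pitfalls for newcomers. Alternatively, set a global default in daemon.json, as covered in Container Runtime.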
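Since almost every service in the templates repeats the same logging block, one way to cut the duplication is a Compose extension field combined with a YAML anchor. The sketch below assumes the name x-logging and the anchor default-logging, both arbitrary; only two services are shown.

```yaml
# Top-level keys starting with x- are ignored by Compose, so they can hold shared fragments
x-logging: &default-logging
  driver: json-file
  options:
    max-size: "10m"
    max-file: "3"

services:
  nginx:
    image: nginx:1.25-alpine
    logging: *default-logging   # alias expands to the block defined above

  db:
    image: postgres:16-alpine
    logging: *default-logging
```

A service that needs different limits, such as the app's max-file of "5", can still declare its own logging block instead of using the alias.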

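Further Reading

- Container Runtime: Docker Engine base setup and the daemon.json baseline

- Metrics & Monitoring: full deployment of Prometheus + Grafana, plus PromQL

- Log Management: an in-depth look at the EFK/ELK log collection architecture

- Secrets & Config: advanced strategies for .env management and secrets injection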