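GitLab CI/CD Templates in Practice: Making the Pipeline Standard Equipment for Every Team

Writing `.gitlab-ci.yml` from scratch is one of the biggest time sinks when a new project starts. Even within one team, one person's lint job runs `npm run lint` while another's runs `npx eslint .`. One person puts the Docker build in the build stage and another puts it in the deploy stage. One person configures a cache and another doesn't, so every CI run spends 5 minutes installing `node_modules`. These inconsistencies don't just waste time. When something breaks, nobody can tell what this repo's CI is actually doing.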

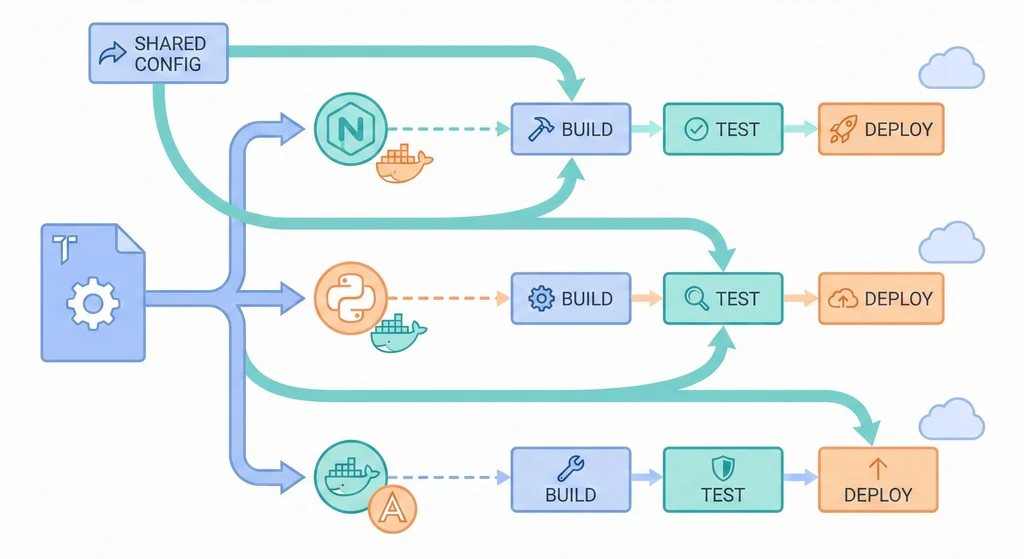

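A CI/CD template is valuable because it extracts proven pipeline logic into something reusable: a new project copies it, changes a few variables, and it runs. Quality stays consistent, setup is fast, and when something goes wrong everyone knows where to look. This article collects GitLab CI/CD templates that have been used in real projects. They range from a basic Node.js project to Docker image builds, multi-environment deployment, monorepos, and a shared-template mechanism, and each one is a complete example you can copy and use directly.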

Architecture Overview

```mermaid
flowchart TD
    Base["Base Template<br/>.gitlab-ci-base.yml"] --> StageBuild["Stage Template: Build<br/>.build-template.yml"]
    Base --> StageTest["Stage Template: Test<br/>.test-template.yml"]
    Base --> StageDeploy["Stage Template: Deploy<br/>.deploy-template.yml"]
    StageBuild --> ProjA["Project A Pipeline<br/>.gitlab-ci.yml"]
    StageTest --> ProjA
    StageDeploy --> ProjA
    StageBuild --> ProjB["Project B Pipeline<br/>.gitlab-ci.yml"]
    StageTest --> ProjB
    StageDeploy --> ProjB
    ProjA --> RunnerA["GitLab Runner<br/>runs the pipeline"]
    ProjB --> RunnerA
```


```mermaid
flowchart TB
    subgraph Trigger["Triggers"]
        Push[Branch Push]
        Tag[Git Tag]
        MR[Merge Request]
        Schedule[Scheduled Pipeline]
    end
    subgraph Pipeline["CI/CD Pipeline"]
        direction LR
        Lint[Stage: lint] --> Test[Stage: test]
        Test --> Build[Stage: build]
        Build --> Deploy[Stage: deploy]
    end
    subgraph Artifacts["Outputs"]
        Image[Docker Image]
        Report[Test Report]
        Coverage[Coverage Report]
    end
    subgraph Environments["Deployment environments"]
        Dev["dev<br/>automatic deploy"]
        Staging["staging<br/>manual trigger"]
        Prod["prod<br/>deploy after approval"]
    end
    Trigger --> Pipeline
    Build --> Image
    Test --> Report
    Test --> Coverage
    Image -->|push| Harbor[Harbor Registry]
    Deploy --> Dev
    Deploy --> Staging
    Deploy --> Prod
```

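On every push, tag, merge request, or scheduled run, GitLab evaluates the `rules` in `.gitlab-ci.yml` to decide which jobs to execute. The pipeline moves through four stages in order: lint, test, build, and deploy. Along the way it produces test reports, coverage reports, and a Docker image. The image is pushed to Harbor and then deployed to whichever environment the branching strategy maps it to.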
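To show how those trigger sources can be filtered before any job is considered, here is a `workflow:rules` fragment that follows the pattern GitLab documents for switching between branch and merge request pipelines. It is an illustrative addition and is not part of the templates below. It creates pipelines for MRs, schedules, tags, and branch pushes, but it skips the branch pipeline when that branch already has an open MR. This prevents every push from producing two near-identical pipelines.

```yaml
# Pipeline-level filter: decides whether a pipeline is created at all.
workflow:
  rules:
    # MR pipelines: merge request created or updated
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    # Scheduled pipelines (e.g. nightly scans); listed before the "never" rule so they are not skipped
    - if: $CI_PIPELINE_SOURCE == "schedule"
    # Tag pipelines (release flow)
    - if: $CI_COMMIT_TAG
    # Branch push while an MR is open: skip, the MR pipeline already covers it
    - if: $CI_COMMIT_BRANCH && $CI_OPEN_MERGE_REQUESTS
      when: never
    # Any other branch push
    - if: $CI_COMMIT_BRANCH
```

Job-level `rules` (such as `$CI_COMMIT_BRANCH == "main"`) still apply inside whatever pipelines this filter allows.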

Core Concepts

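- `.gitlab-ci.yml` structure: a pipeline is made of `stages` (the order of phases), jobs (the units that actually execute), `variables`, and `rules` (the trigger conditions). Every job belongs to exactly one stage (`test` if none is given). Jobs in the same stage run in parallel by default, while stages run one after another unless `needs` is used to start a job early. You need to understand this structure before you can write good CI: stages define the order of work, jobs define what gets done, and rules define when it happens.

- GitLab Runner types: a runner is the machine that actually executes CI jobs. Shared runners are provided by the GitLab instance and used by every project. They suit lightweight jobs but can queue. Group runners belong to a group and are available to every repo in it, which makes them a good fit for sharing resources within a product line. Project-specific runners serve a single repo and suit projects with special requirements, such as GPUs or large amounts of memory. The runner type you choose directly affects CI speed and stability.

- Pipeline triggers: a branch push is the most common trigger, and every push to a remote branch runs CI. Tag pipelines usually start a release. `merge_request_event` pipelines run only when an MR is created or updated, so they suit lint and test but not build/deploy. `schedule` pipelines run on a timer, so they suit a daily security scan or a nightly build. The `if` conditions in `rules` (checked against variables such as `$CI_PIPELINE_SOURCE`, `$CI_COMMIT_BRANCH`, and `$CI_COMMIT_TAG`) control precisely which jobs run for each trigger.

- Artifacts vs. cache: artifacts are files a job produces. They are passed to jobs in later stages and can be downloaded from the GitLab UI (for example, test reports or build output). Cache exists only for speed. It stores files that rarely change (such as npm's `.npm/` download cache or `.pip-cache`) so the next run doesn't reinstall everything. Cache is best-effort and may be missing, so jobs must still work without it. The key difference is that artifacts are "the output of this pipeline" while cache is "acceleration across pipelines". Mixing the two up leads to flaky pipelines or wasted storage.

- Environments and deployment approval: GitLab's `environment` keyword defines a deployment target. Combined with `when: manual` and `allow_failure: false`, it creates a manual gate. With a Protected Environment configured, only specific roles (for example, Maintainer) or users can trigger prod deployments. This gives code proper checkpoints as it moves from dev → staging → prod.

- `include` and template inheritance: `include` pulls CI configuration in from other files or repos. `extends` makes a job inherit every setting of another job and override specific fields. `!reference` copies one specific section of a job (for example, only its `before_script`). Together these three mechanisms are the foundation for shared CI templates, so that each repo does not have to maintain its own full copy of `.gitlab-ci.yml`.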
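Here is a minimal sketch of `extends` and `!reference` working together. It assumes two hypothetical hidden jobs, `.ssh_setup` and `.deploy_base`. Jobs whose names start with a dot never run on their own and exist only for reuse.

```yaml
# Hidden job (leading dot): holds reusable SSH setup steps
.ssh_setup:
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -

# Hidden job: common base settings for deploy jobs
.deploy_base:
  stage: deploy
  image: alpine:latest

deploy:dev:
  extends: .deploy_base                        # inherits stage and image; keys set here win
  before_script:
    - !reference [.ssh_setup, before_script]   # copies only the before_script list
    - echo "dev-specific setup"                # nested lists are flattened by GitLab
  script:
    - echo "deploying to dev"
```

`extends` merges whole job definitions, while `!reference` copies a single key. This lets a job take its base from one template and pick individual steps from another.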

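Practical Templates

Template 1: Node.js project (lint → test → build → deploy)

This is the most common pipeline for frontend or backend Node.js projects. It covers all four stages (lint, test, build, deploy) and includes cache and artifacts settings.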

```yaml
# .gitlab-ci.yml - complete Node.js pipeline
variables:
  SERVICE_NAME: "myapp-api"
  REGISTRY: "harbor.example.com"
  IMAGE: "${REGISTRY}/ec/${SERVICE_NAME}"
  NODE_VERSION: "20"

stages:
  - lint
  - test
  - build
  - deploy

# ===== Global cache settings =====
# npm ci deletes node_modules before installing, so cache npm's download cache (.npm/) instead
default:
  cache:
    key:
      files:
        - package-lock.json
    paths:
      - .npm/
    policy: pull

# ===== Lint =====
lint:eslint:
  stage: lint
  image: node:${NODE_VERSION}-alpine
  cache:
    key:
      files:
        - package-lock.json
    paths:
      - .npm/
    policy: pull-push # this job is the one that populates the cache
  script:
    - npm ci --cache .npm --prefer-offline --ignore-scripts
    - npm run lint
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH == "main"

lint:type-check:
  stage: lint
  image: node:${NODE_VERSION}-alpine
  script:
    - npm ci --cache .npm --prefer-offline --ignore-scripts
    - npx tsc --noEmit
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH == "main"

# ===== Test =====
test:unit:
  stage: test
  image: node:${NODE_VERSION}-alpine
  services:
    - postgres:16-alpine
    - redis:7-alpine
  variables:
    POSTGRES_DB: test_db
    POSTGRES_USER: test_user
    POSTGRES_PASSWORD: test_pass
    DATABASE_URL: "postgresql://test_user:test_pass@postgres:5432/test_db"
    REDIS_URL: "redis://redis:6379"
  script:
    - npm ci --cache .npm --prefer-offline
    - npm run test:unit -- --coverage
  coverage: '/All files[^|]*\|[^|]*\s+([\d\.]+)/'
  artifacts:
    when: always
    reports:
      junit: junit-report.xml # assumes the test runner is configured to write this file
      coverage_report:
        coverage_format: cobertura
        path: coverage/cobertura-coverage.xml
    expire_in: 7 days

test:e2e:
  stage: test
  image: node:${NODE_VERSION}-alpine
  services:
    - postgres:16-alpine
  variables:
    POSTGRES_DB: test_db
    POSTGRES_USER: test_user
    POSTGRES_PASSWORD: test_pass
    DATABASE_URL: "postgresql://test_user:test_pass@postgres:5432/test_db"
  script:
    - npm ci --cache .npm --prefer-offline
    - npm run test:e2e
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
  artifacts:
    when: on_failure
    paths:
      - test-results/
    expire_in: 3 days

# ===== Build =====
build:docker:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    # --password-stdin keeps the token out of the process list
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$REGISTRY"
  script:
    # Use this branch's last pushed image as layer cache (assumes BuildKit, the default builder in Docker 23+);
    # BUILDKIT_INLINE_CACHE=1 embeds the cache metadata that --cache-from needs next time
    - >
      docker build
      --build-arg NODE_VERSION=${NODE_VERSION}
      --build-arg BUILDKIT_INLINE_CACHE=1
      --cache-from ${IMAGE}:${CI_COMMIT_REF_SLUG}
      -t ${IMAGE}:${CI_COMMIT_SHA}
      -t ${IMAGE}:${CI_COMMIT_REF_SLUG}
      .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
    - docker push ${IMAGE}:${CI_COMMIT_REF_SLUG}
    - |
      if [ -n "$CI_COMMIT_TAG" ]; then
        docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:${CI_COMMIT_TAG}
        docker push ${IMAGE}:${CI_COMMIT_TAG}
      fi
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG

# ===== Deploy =====
deploy:dev:
  stage: deploy
  image: alpine:latest
  environment:
    name: dev
    url: https://${SERVICE_NAME}.dev.example.com
  before_script:
    - apk add --no-cache openssh-client curl # curl is needed for the health check
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
  script:
    # StrictHostKeyChecking=no skips host key verification: convenient, acceptable only on a trusted network.
    # The sed rewrites every "image:" line, so this assumes the compose file holds only this service.
    # A folded block (>) is needed because "image: " inside a plain YAML scalar is a parse error.
    - >
      ssh -o StrictHostKeyChecking=no deploy@dev-host
      "cd /opt/stacks/${SERVICE_NAME} &&
      sed -i 's|image:.*|image: ${IMAGE}:${CI_COMMIT_SHA}|' docker-compose.yml &&
      docker compose pull &&
      docker compose up -d --remove-orphans"
    - sleep 5
    - curl -sf "https://${SERVICE_NAME}.dev.example.com/health"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
```

Key points of this template: the two lint-stage jobs (`eslint` and `type-check`) run in parallel to save time. The test stage attaches PostgreSQL and Redis service containers to mimic the real environment. The build job uses `--cache-from` to speed up Docker builds. The deploy job ends with a health check, so a failed deployment is caught immediately.
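Here is one possible refinement. It assumes you want the image built as soon as the fast checks pass, instead of waiting for the whole test stage (including `test:e2e`). `needs` turns the stage order into a DAG. The keys below would be added to the existing `build:docker` job from Template 1. `optional: true` keeps tag pipelines valid, because the lint jobs' rules do not create them on tags.

```yaml
build:docker:
  # Start as soon as these jobs succeed instead of waiting for all earlier stages
  needs:
    - job: lint:eslint
      optional: true     # absent from tag pipelines; ignored when missing
    - job: lint:type-check
      optional: true
    - job: test:unit     # has no rules, so it exists in every pipeline
```

The trade-off is that an e2e failure no longer holds back the image build. The pipeline still reports the failure, but the image may already be pushed.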

Template 2: Docker project (build image → push to Harbor → deploy)

This template suits fully containerized services. It focuses on the image tagging strategy and the push flow.

```yaml
# .gitlab-ci.yml - Docker build & deploy pipeline
variables:
  HARBOR_HOST: "harbor.example.com"
  HARBOR_PROJECT: "ec"
  SERVICE_NAME: "payment-service"
  IMAGE: "${HARBOR_HOST}/${HARBOR_PROJECT}/${SERVICE_NAME}"

stages:
  - build
  - scan
  - deploy

# ===== Build =====
build:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
    DOCKER_BUILDKIT: "1"
  before_script:
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$HARBOR_HOST"
  script:
    # Layered tagging strategy
    - >
      docker build
      --build-arg BUILD_DATE=$(date -u +"%Y-%m-%dT%H:%M:%SZ")
      --build-arg VCS_REF=${CI_COMMIT_SHA}
      --label org.opencontainers.image.created=$(date -u +"%Y-%m-%dT%H:%M:%SZ")
      --label org.opencontainers.image.revision=${CI_COMMIT_SHA}
      -t ${IMAGE}:${CI_COMMIT_SHA}
      .
    # Commit SHA tag (immutable, used for traceability)
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
    # Branch slug tag (mutable, maps to an environment)
    - docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:${CI_COMMIT_REF_SLUG}
    - docker push ${IMAGE}:${CI_COMMIT_REF_SLUG}
    # Semver tag (if there is one)
    - |
      if [ -n "$CI_COMMIT_TAG" ]; then
        docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:${CI_COMMIT_TAG}
        docker push ${IMAGE}:${CI_COMMIT_TAG}
        # Also move latest (release tags only)
        docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:latest
        docker push ${IMAGE}:latest
      fi
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG =~ /^v\d+/

# ===== Security Scan =====
scan:trivy:
  stage: scan
  image:
    name: aquasec/trivy:latest
    entrypoint: [""]
  variables:
    TRIVY_USERNAME: $HARBOR_ROBOT_USER
    TRIVY_PASSWORD: $HARBOR_ROBOT_TOKEN
  script:
    # Save the full result as JSON first so it survives as an artifact
    - trivy image --exit-code 0 --format json --output trivy-report.json ${IMAGE}:${CI_COMMIT_SHA}
    # Then print a readable table and fail on fixable HIGH/CRITICAL findings
    - >
      trivy image
      --exit-code 1
      --severity CRITICAL,HIGH
      --ignore-unfixed
      --format table
      ${IMAGE}:${CI_COMMIT_SHA}
  allow_failure: true
  artifacts:
    when: always # keep the report even when the job fails
    # GitLab's container_scanning report type expects GitLab's own schema, not Trivy's native JSON,
    # so the raw JSON is kept as a plain artifact here
    paths:
      - trivy-report.json
    expire_in: 7 days
  rules:
    # Same conditions as build, so the scanned image always exists
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG =~ /^v\d+/

# ===== Deploy =====
deploy:
  stage: deploy
  image: alpine:latest
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
  script:
    # All script lines run in one shell, so the variables set here are visible below.
    # Health-check URLs assume the <service>.dev / <service>.staging naming used in Template 3.
    - |
      TARGET_HOST=""
      IMAGE_TAG=""
      HEALTH_URL=""
      case "$CI_COMMIT_BRANCH" in
        main)
          TARGET_HOST="$DEV_HOST"
          IMAGE_TAG="${CI_COMMIT_SHA}"
          HEALTH_URL="https://${SERVICE_NAME}.dev.example.com/health"
          ;;
      esac
      if [ -n "$CI_COMMIT_TAG" ]; then
        TARGET_HOST="$STAGING_HOST"
        IMAGE_TAG="${CI_COMMIT_TAG}"
        HEALTH_URL="https://${SERVICE_NAME}.staging.example.com/health"
      fi
      if [ -z "$TARGET_HOST" ]; then
        echo "No deploy target for this ref" >&2
        exit 1
      fi
    # StrictHostKeyChecking=no skips host key verification: acceptable only on a trusted network
    - >
      ssh -o StrictHostKeyChecking=no deploy@${TARGET_HOST}
      "cd /opt/stacks/${SERVICE_NAME} &&
      IMAGE_TAG=${IMAGE_TAG} docker compose pull &&
      IMAGE_TAG=${IMAGE_TAG} docker compose up -d --remove-orphans"
    - sleep 10
    - curl -sf "$HEALTH_URL"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG =~ /^v\d+/
```

Highlights of this template: the build job stamps OCI labels with the build time and commit, so images can be traced later. The scan stage runs Trivy with `allow_failure: true`, which lets the deploy proceed despite findings while still producing a report. The deploy job picks the target environment, image tag, and health-check URL from the trigger type.
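As an alternative sketch for choosing the target per trigger, `rules:variables` can set the deploy variables declaratively instead of in a shell `case` block. This keeps the mapping visible in the YAML itself. It assumes the same `DEV_HOST`/`STAGING_HOST` CI/CD variables as the template and the dev/staging URL naming used elsewhere in this article. Only the `rules` part of the `deploy` job is shown.

```yaml
deploy:
  rules:
    # Push to main: deploy the commit image to dev
    - if: $CI_COMMIT_BRANCH == "main"
      variables:
        TARGET_HOST: $DEV_HOST
        IMAGE_TAG: $CI_COMMIT_SHA
        HEALTH_URL: "https://${SERVICE_NAME}.dev.example.com/health"
    # Version tag: deploy the tagged image to staging
    - if: $CI_COMMIT_TAG =~ /^v\d+/
      variables:
        TARGET_HOST: $STAGING_HOST
        IMAGE_TAG: $CI_COMMIT_TAG
        HEALTH_URL: "https://${SERVICE_NAME}.staging.example.com/health"
```

With this in place, the script can drop its variable-selection block and use `$TARGET_HOST`, `$IMAGE_TAG`, and `$HEALTH_URL` directly.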

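Template 3: Multi-environment deployment (dev automatic, staging manual, prod approval)

This deployment strategy is the closest match to what real teams need. Each of the three environments has its own trigger condition and security level.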

```yaml
# .gitlab-ci.yml - multi-environment deployment strategy
variables:
  SERVICE_NAME: "order-api"
  REGISTRY: "harbor.example.com"
  IMAGE: "${REGISTRY}/ec/${SERVICE_NAME}"

stages:
  - lint
  - test
  - build
  - deploy_dev
  - deploy_staging
  - deploy_prod

# ===== Lint & Test (omitted, same as Template 1) =====
include:
  - local: '.gitlab/ci/lint-test.yml'

# ===== Build =====
build:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  before_script:
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$REGISTRY"
  script:
    - docker build -t ${IMAGE}:${CI_COMMIT_SHA} .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
    - |
      if [ -n "$CI_COMMIT_TAG" ]; then
        docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:${CI_COMMIT_TAG}
        docker push ${IMAGE}:${CI_COMMIT_TAG}
      fi
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG

# ===== Deploy to Dev (automatic) =====
deploy:dev:
  stage: deploy_dev
  image: alpine:latest
  environment:
    name: dev
    url: https://${SERVICE_NAME}.dev.example.com
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
    # Assumption: SSH_KNOWN_HOSTS is a CI/CD variable holding ssh-keyscan output for the hosts;
    # without a known_hosts entry, non-interactive ssh fails host key verification
    - mkdir -p ~/.ssh && echo "$SSH_KNOWN_HOSTS" >> ~/.ssh/known_hosts
  script:
    - >
      ssh deploy@${DEV_HOST}
      "cd /opt/stacks/${SERVICE_NAME} &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose up -d"
    - sleep 5
    - curl -sf "https://${SERVICE_NAME}.dev.example.com/health"
  rules:
    # dev: every push to main deploys automatically, no human involved
    - if: $CI_COMMIT_BRANCH == "main"

# ===== Deploy to Staging (manual trigger) =====
deploy:staging:
  stage: deploy_staging
  image: alpine:latest
  environment:
    name: staging
    url: https://${SERVICE_NAME}.staging.example.com
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
    - mkdir -p ~/.ssh && echo "$SSH_KNOWN_HOSTS" >> ~/.ssh/known_hosts
  script:
    - >
      ssh deploy@${STAGING_HOST}
      "cd /opt/stacks/${SERVICE_NAME} &&
      IMAGE_TAG=${CI_COMMIT_TAG} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_TAG} docker compose up -d"
    - sleep 10
    - curl -sf "https://${SERVICE_NAME}.staging.example.com/health"
  rules:
    # staging: triggered manually after a release tag; QA decides when to deploy
    - if: $CI_COMMIT_TAG =~ /^v\d+\.\d+\.\d+$/
      when: manual
  allow_failure: false # must succeed before the pipeline can continue to prod

# ===== Deploy to Prod (approval required) =====
deploy:prod:
  stage: deploy_prod
  image: alpine:latest
  environment:
    name: prod
    url: https://${SERVICE_NAME}.example.com
    deployment_tier: production
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
    - mkdir -p ~/.ssh && echo "$SSH_KNOWN_HOSTS" >> ~/.ssh/known_hosts
  script:
    - echo "Deploying ${SERVICE_NAME}:${CI_COMMIT_TAG} to production..."
    - >
      ssh deploy@${PROD_HOST}
      "cd /opt/stacks/${SERVICE_NAME} &&
      IMAGE_TAG=${CI_COMMIT_TAG} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_TAG} docker compose up -d"
    - sleep 15
    - curl -sf "https://${SERVICE_NAME}.example.com/health"
    - echo "Production deploy successful."
  rules:
    # prod: triggered manually after a release tag;
    # paired with a GitLab Protected Environment so only Maintainers can run it
    - if: $CI_COMMIT_TAG =~ /^v\d+\.\d+\.\d+$/
      when: manual
  needs:
    - deploy:staging # staging must have been deployed successfully first
```

This template implements progressive deployment. Dev is fully automatic. Staging is triggered manually but needs no approval. Prod is triggered manually, and a Protected Environment restricts it to specific roles. `needs` guarantees that the prod deployment always comes after staging. This design balances development speed against deployment safety.
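One small addition to consider for the prod job is `resource_group`. It is offered here as a suggestion rather than part of the template. GitLab runs at most one job per resource group at a time, so two release tags triggered close together cannot deploy to production concurrently. The group name `production` is an arbitrary choice.

```yaml
deploy:prod:
  # Only one job in the "production" resource group runs at a time;
  # a second deploy waits until the running one finishes.
  resource_group: production
```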

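Template 4: Monorepo pipeline (build only the services that changed)

When several services live in one repo (a monorepo), a push should not build every service. The `changes` keyword lets you trigger only the jobs of the services that were actually modified.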

```yaml
# .gitlab-ci.yml - monorepo pipeline
variables:
  REGISTRY: "harbor.example.com"

stages:
  - lint
  - test
  - build
  - deploy

# ===== Shared templates (YAML anchors) =====
.docker_build_template: &docker_build
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  before_script:
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$REGISTRY"

.deploy_template: &deploy
  stage: deploy
  image: alpine:latest
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
    # Assumption: SSH_KNOWN_HOSTS is a CI/CD variable holding ssh-keyscan output for DEV_HOST
    - mkdir -p ~/.ssh && echo "$SSH_KNOWN_HOSTS" >> ~/.ssh/known_hosts

# ===== API service =====
lint:api:
  stage: lint
  image: node:20-alpine
  script:
    - cd services/api && npm ci --ignore-scripts && npm run lint
  rules:
    - changes:
        - services/api/**/*
        - packages/shared/**/* # changes to the shared package must trigger this too

test:api:
  stage: test
  image: node:20-alpine
  services:
    - postgres:16-alpine
  variables:
    POSTGRES_DB: test
    POSTGRES_USER: test
    POSTGRES_PASSWORD: test
    DATABASE_URL: "postgresql://test:test@postgres:5432/test"
  script:
    - cd services/api && npm ci && npm test
  rules:
    - changes:
        - services/api/**/*
        - packages/shared/**/*

build:api:
  <<: *docker_build
  variables:
    SERVICE_NAME: "api"
    IMAGE: "${REGISTRY}/ec/api"
  script:
    - docker build -t ${IMAGE}:${CI_COMMIT_SHA} -f services/api/Dockerfile .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      changes:
        - services/api/**/*
        - packages/shared/**/*

deploy:api:
  <<: *deploy
  environment:
    name: dev
  script:
    - >
      ssh deploy@${DEV_HOST}
      "cd /opt/stacks/api &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose up -d"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      changes:
        - services/api/**/*
        - packages/shared/**/*

# ===== Worker service =====
lint:worker:
  stage: lint
  image: python:3.12-slim
  script:
    - cd services/worker && pip install ruff && ruff check .
  rules:
    - changes:
        - services/worker/**/*

test:worker:
  stage: test
  image: python:3.12-slim
  script:
    - cd services/worker
    - pip install -r requirements.txt -r requirements-dev.txt
    # term report is printed for the coverage regex; any explicit --cov-report disables the default one
    - pytest --cov=. --cov-report=term --cov-report=xml
  coverage: '/TOTAL.*\s(\d+)%/'
  rules:
    - changes:
        - services/worker/**/*

build:worker:
  <<: *docker_build
  variables:
    SERVICE_NAME: "worker"
    IMAGE: "${REGISTRY}/ec/worker"
  script:
    - docker build -t ${IMAGE}:${CI_COMMIT_SHA} -f services/worker/Dockerfile .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      changes:
        - services/worker/**/*

deploy:worker:
  <<: *deploy
  environment:
    name: dev
  script:
    - >
      ssh deploy@${DEV_HOST}
      "cd /opt/stacks/worker &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose up -d"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      changes:
        - services/worker/**/*

# ===== Admin frontend =====
build:admin:
  <<: *docker_build
  variables:
    SERVICE_NAME: "admin"
    IMAGE: "${REGISTRY}/ec/admin"
  script:
    - docker build -t ${IMAGE}:${CI_COMMIT_SHA} -f services/admin/Dockerfile .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      changes:
        - services/admin/**/*
        - packages/ui-components/**/* # changes to shared UI components
```

The key to a monorepo pipeline is the `changes` rule: a service's jobs run only when files under its directory change. Changes to a shared package (such as `packages/shared`) must also trigger every service that depends on it. The YAML anchor (`&docker_build`) avoids repeating the same build settings. Anchors only work within a single file, so blocks shared across included files need `extends` or `!reference` instead.
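For feature-branch pushes, `rules:changes:compare_to` (GitLab 15.3 and later) can diff the whole branch against a fixed ref instead of only the latest push. This also avoids the "every job runs on a new branch" behavior. The sketch below assumes `main` is the default branch and shows only the `rules` of `test:api`. Merge request pipelines already compare against the target branch by default.

```yaml
test:api:
  rules:
    # Feature-branch pushes: compare the branch against main, not just the previous push
    - if: $CI_COMMIT_BRANCH && $CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH
      changes:
        paths:
          - services/api/**/*
          - packages/shared/**/*
        compare_to: refs/heads/main
    # MR pipelines and pushes to main: default comparison behavior
    - if: $CI_PIPELINE_SOURCE == "merge_request_event" || $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      changes:
        - services/api/**/*
        - packages/shared/**/*
```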

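Template 5: Shared CI template (included from another repo)

When a team has many repos, maintaining a separate `.gitlab-ci.yml` in each one is wasteful. A better approach is to extract the shared pipeline logic into a dedicated template repo and have each project `include` it.

Layout of the template repo (`infra/ci-templates`):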

```text
infra/ci-templates/
  templates/
    nodejs.yml        # standard pipeline for Node.js projects
    python.yml        # standard pipeline for Python projects
    docker-build.yml  # Docker build & push logic
    deploy.yml        # deployment logic
```

The shared template file (`templates/nodejs.yml`):

```yaml
# templates/nodejs.yml - shared CI template for Node.js projects
# Usage: include this file from a project's .gitlab-ci.yml
spec:
  inputs:
    node_version:
      default: "20"
    service_name:        # no default, so every project must supply it
    registry:
      default: "harbor.example.com"
    registry_project:
      default: "ec"
---
variables:
  IMAGE: "$[[ inputs.registry ]]/$[[ inputs.registry_project ]]/$[[ inputs.service_name ]]"

stages:
  - lint
  - test
  - build
  - deploy

.node_base:
  image: node:$[[ inputs.node_version ]]-alpine
  cache:
    key:
      files:
        - package-lock.json
    paths:
      - .npm/ # npm's download cache; npm ci wipes node_modules anyway

lint:
  extends: .node_base
  stage: lint
  script:
    - npm ci --cache .npm --prefer-offline --ignore-scripts
    - npm run lint
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH == "main"

test:
  extends: .node_base
  stage: test
  script:
    - npm ci --cache .npm --prefer-offline
    - npm test -- --coverage
  coverage: '/All files[^|]*\|[^|]*\s+([\d\.]+)/'
  artifacts:
    reports:
      coverage_report:
        coverage_format: cobertura
        path: coverage/cobertura-coverage.xml
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH == "main"

build:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  before_script:
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$[[ inputs.registry ]]"
  script:
    - docker build -t ${IMAGE}:${CI_COMMIT_SHA} .
    - docker push ${IMAGE}:${CI_COMMIT_SHA}
    - |
      if [ -n "$CI_COMMIT_TAG" ]; then
        docker tag ${IMAGE}:${CI_COMMIT_SHA} ${IMAGE}:${CI_COMMIT_TAG}
        docker push ${IMAGE}:${CI_COMMIT_TAG}
      fi
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
    - if: $CI_COMMIT_TAG

deploy:dev:
  stage: deploy
  image: alpine:latest
  environment:
    name: dev
    url: https://$[[ inputs.service_name ]].dev.example.com
  before_script:
    - apk add --no-cache openssh-client curl
    - eval $(ssh-agent -s)
    - echo "$SSH_PRIVATE_KEY" | ssh-add -
    # Assumption: SSH_KNOWN_HOSTS is a CI/CD variable holding ssh-keyscan output for DEV_HOST
    - mkdir -p ~/.ssh && echo "$SSH_KNOWN_HOSTS" >> ~/.ssh/known_hosts
  script:
    - >
      ssh deploy@${DEV_HOST}
      "cd /opt/stacks/$[[ inputs.service_name ]] &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose pull &&
      IMAGE_TAG=${CI_COMMIT_SHA} docker compose up -d"
    - sleep 5
    - curl -sf "https://$[[ inputs.service_name ]].dev.example.com/health"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
```

A project's `.gitlab-ci.yml` that uses the shared template:

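```yaml
# .gitlab-ci.yml - using the shared template
include:
  - project: 'infra/ci-templates'
    ref: main
    file: 'templates/nodejs.yml'
    inputs:
      service_name: "notification-api"
      node_version: "20"
      registry_project: "ec"

# Jobs can be overridden or added here; keys are merged into the included job
test:
  services:
    - postgres:16-alpine
    - redis:7-alpine
  variables:
    # The postgres image will not start without a password, so set the service credentials explicitly
    POSTGRES_DB: test
    POSTGRES_USER: test
    POSTGRES_PASSWORD: test
    DATABASE_URL: "postgresql://test:test@postgres:5432/test"
    REDIS_URL: "redis://redis:6379"
```

The payoff of a shared template is that each project's `.gitlab-ci.yml` is only a dozen or so lines, and most of the work is setting variables. Once the template repo is updated, every project that references it picks up the new logic on its next CI run. A broken template change therefore also reaches all of them at once. If a project has special requirements, it can override specific jobs in its own `.gitlab-ci.yml`.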
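Projects that track `ref: main` pick up every template change on their next run. If you want to control that rollout, one option is to pin projects to a release tag of `infra/ci-templates` and bump the tag deliberately. This assumes the template repo publishes tags, and the tag `v1.2.0` below is hypothetical.

```yaml
include:
  - project: 'infra/ci-templates'
    ref: v1.2.0   # hypothetical release tag; upgrade by changing this value in an MR
    file: 'templates/nodejs.yml'
    inputs:
      service_name: "notification-api"
```

Each upgrade then goes through the project's own MR pipeline, so a broken template version fails in one place instead of everywhere.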

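Variable management: .env injection, CI/CD Variables, Vault integration

Secret management is the most error-prone part of a pipeline. Below are three approaches, ordered from simple to advanced.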

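```yaml
# These are fragments: stage, image, and SSH agent setup are omitted for brevity.

# ===== Option 1: GitLab CI/CD Variables =====
# Set in the GitLab UI under Settings > CI/CD > Variables
# Suits a small number of rarely changing secrets (e.g. the Harbor token, the SSH key)
deploy:
  script:
    # $HARBOR_ROBOT_TOKEN is injected automatically from CI/CD Variables
    - echo "$HARBOR_ROBOT_TOKEN" | docker login -u "$HARBOR_ROBOT_USER" --password-stdin "$REGISTRY"
    # Variables marked "Protected" are only available in pipelines for protected branches/tags
    # Variables marked "Masked" are hidden in the pipeline log

# ===== Option 2: injecting a .env file =====
# Suits environment configuration whose values differ per environment
deploy:dev:
  script:
    # Decode the base64-encoded .env content stored in a CI/CD Variable
    - echo "$DEV_ENV_FILE" | base64 -d > .env
    - scp .env deploy@${DEV_HOST}:/opt/stacks/${SERVICE_NAME}/.env
    - ssh deploy@${DEV_HOST} "cd /opt/stacks/${SERVICE_NAME} && docker compose up -d"
  after_script:
    - rm -f .env # remove the local .env copy

# ===== Option 3: HashiCorp Vault integration =====
# Suits many secrets, automatic rotation, and audit-trail requirements
deploy:prod:
  id_tokens:
    VAULT_ID_TOKEN:
      aud: https://vault.example.com
  secrets:
    DATABASE_URL:
      vault: ec/prod/database/url@secrets
      file: false
    API_SECRET_KEY:
      vault: ec/prod/api/secret-key@secrets
      file: false
  script:
    - echo "DATABASE_URL=${DATABASE_URL}" > .env   # ">" starts a fresh file
    - echo "API_SECRET_KEY=${API_SECRET_KEY}" >> .env
    - scp .env deploy@${PROD_HOST}:/opt/stacks/${SERVICE_NAME}/.env
    - ssh deploy@${PROD_HOST} "cd /opt/stacks/${SERVICE_NAME} && docker compose up -d"
  after_script:
    - rm -f .env
```

Each option has trade-offs. CI/CD Variables are the simplest, but they don't scale to large numbers of secrets. `.env` injection is flexible, but you must keep the `.env` file out of artifacts and logs. Vault integration is the most secure but the most involved to set up, and the `secrets:vault` keyword requires GitLab Premium or Ultimate. A small team can start with CI/CD Variables and migrate to Vault as the number of secrets grows.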
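The Vault fragment relies on settings it doesn't show. Below is a sketch of a more complete job. It assumes a Vault JWT auth role named `ec-prod-deploy` (hypothetical) and passes the server address through GitLab's `VAULT_SERVER_URL` variable. `secrets:token` names the ID token explicitly, which becomes mandatory once a job defines more than one.

```yaml
deploy:prod:
  variables:
    VAULT_SERVER_URL: https://vault.example.com   # Vault address used by the secrets: keyword
    VAULT_AUTH_ROLE: ec-prod-deploy               # hypothetical JWT role configured in Vault
  id_tokens:
    VAULT_ID_TOKEN:
      aud: https://vault.example.com
  secrets:
    DATABASE_URL:
      vault: ec/prod/database/url@secrets   # path ec/prod/database, field url, mount "secrets"
      file: false
      token: $VAULT_ID_TOKEN
```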

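Common Problems and Risks

- Slow pipelines (cache misconfigured, stages not parallelized): the most common problem is reinstalling with `npm install` or `pip install` on every CI run, which spends 2 to 3 minutes even when no dependency changed. The fix is a cache keyed on the hash of `package-lock.json` or `requirements.txt`, so dependencies are downloaded again only when they change. Another common problem is running every job serially. Independent jobs such as `lint:eslint` and `lint:type-check` can share a stage and run in parallel. That stage then takes roughly as long as the slower job rather than the sum of both.

- Runner shortage leaves jobs pending: if every project shares one set of shared runners, jobs queue at peak times and sit in the pending state. A related but different symptom is the message `This job is stuck because the project doesn't have any runners online assigned to it`. It means that no online runner can pick up the job at all (for example, none matches its `tags`). Fixes: give critical projects dedicated group or project runners, raise the runner's `concurrent` setting, or consider autoscaling runners that scale with load.

- Docker-in-Docker (DinD) security and performance: building images in CI with the `docker:24-dind` service is the most common approach, but it requires privileged mode. That effectively gives containers on the runner root-level access. If a malicious `.gitlab-ci.yml` gets merged, it can reach any resource on the runner host. Alternatives include Kaniko, which needs no Docker daemon, and Buildah, which supports rootless builds. Running the runner without privileged mode (`--docker-privileged=false`) and bind-mounting the host's Docker socket avoids privileged mode. However, it hands jobs control of the host's Docker daemon, so it is not meaningfully safer than DinD.

- Secrets leaking into pipeline logs: if you run `echo $DATABASE_URL` in a script to debug and the variable isn't Masked, the password ends up in the CI log. A subtler problem is that some tools print connection strings (including passwords) in error messages, for example when a PostgreSQL connection fails. Fixes: mark every secret CI/CD variable as Masked and Protected, use `after_script` to clean up temporary files containing sensitive data, and periodically review pipeline logs for accidental leaks.

- Cache not shared across runners: GitLab stores the cache locally on the runner by default. If one run lands on runner A and the next on runner B, the cache misses. Fixes: configure a distributed cache backend (such as S3 or MinIO), or use `tags` to pin jobs to a specific runner.

- Overly complex rules make jobs run (or not run) unexpectedly: GitLab rejects a job that combines `only`/`except` (legacy syntax) with `rules` (current syntax). Mixing the two styles across jobs, or writing `rules` whose conditions overlap, makes behavior hard to predict. Recommendations: use `rules` everywhere instead of the legacy syntax, keep each job to about three rules at most, and validate `.gitlab-ci.yml` with the CI Lint tool before committing.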
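Below is a sketch of a Kaniko-based build job that avoids both the Docker daemon and privileged mode. It is adapted from the pattern in GitLab's documentation and reuses this article's `REGISTRY`, `IMAGE`, and Harbor robot-account variables. The `:debug` image tag is used because it includes the shell that GitLab jobs need. In practice you would pin a specific version and check the upstream project's maintenance status before adopting it.

```yaml
build:kaniko:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug   # debug variant ships a busybox shell
    entrypoint: [""]
  script:
    # Write Harbor credentials where Kaniko looks for them
    - mkdir -p /kaniko/.docker
    - >
      echo "{\"auths\":{\"${REGISTRY}\":{\"auth\":\"$(printf "%s:%s" "${HARBOR_ROBOT_USER}" "${HARBOR_ROBOT_TOKEN}" | base64 | tr -d '\n')\"}}}"
      > /kaniko/.docker/config.json
    # Build from the repo root and push directly; no Docker daemon involved
    - >
      /kaniko/executor
      --context "${CI_PROJECT_DIR}"
      --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
      --destination "${IMAGE}:${CI_COMMIT_SHA}"
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
```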

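Pros

- A new project gets a complete CI/CD pipeline within minutes instead of starting from scratch

- Pipeline logic is consistent across all of the team's repos, which lowers cognitive load

- Shared templates are maintained in one place, so a single update benefits every project

- The multi-environment deployment strategy has clear safety tiers (automatic, manual, approval)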

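Cons / Limitations

- Shared templates that are too strict limit flexibility for projects with special needs

- GitLab CI's YAML syntax has a real learning curve, especially the interaction between `rules`, `include`, and `extends`

- Docker-in-Docker performance and security remain pain points

- In a monorepo, `changes` rules can misjudge what changed. Branch pipelines compare only against the previous push, and `changes` always evaluates to true on new branches and in tag or scheduled pipelines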